Conspiracy theories in digital culture

In early 2018, YouTube was blamed for promoting conspiracy theory videos containing disinformation. The spread of these theories was supported by YouTube's recommendation system, which indicates that algorithms outperform reality and even govern our access to information. Indeed, conspiracy theories seem to be more prevalent than ever, because the Internet introduces new affordances for determining who has authority and expertise.

Of course, conspiracy theories are not a new phenomenon; they existed long before the Internet came along (Varis, 2018). Nowadays, however, the media play a big role in spreading and promoting those theories. Therefore, taking YouTube's recommendation algorithm as the focus of this study, I will analyze conspiracy theories as part of digital culture, as well as the development of conspiracy theories online.

Conspiracy theories in mainstream media

According to Oliver and Wood (2014), conspiracy theories are a type of public, political, and historical discourse that "provides a frame of interpretation for public events" (p. 953). Conspiracy theorists see themselves as having privileged access to, and knowledge of, what they think is reality. This means possessing knowledge that others lack, "which constitutes a buffer that partially protects believers against the potentially disastrous effects of disconfirming evidence" (Barkun, 2016). Consequently, conspiracy theorists claim to know the truth and present alternative, deviant evidence that is often actively rejected and excluded from mainstream media (Barkun, 2016).

However, due to the emergence of the Internet and social media, conspiracy theories have now become widespread in the mainstream media. As a result, they get more mainstream exposure and reach a broader audience than they did in the past (Barkun, 2016). A significant aspect here is that the Internet and social media bypass the gatekeepers who traditionally filtered content, thus transforming those gatekeepers from active into passive agents (Barkun, 2016).

According to Varis (2018), the Internet has contributed greatly to conspiracy theories and the exposure they get. Nowadays, people from all over the world can work together, discuss ideas with each other, and present evidence to other people. As a consequence, the scale and scope of exposure of conspiracy theories have expanded tremendously. Overall, the Internet introduces new affordances for deciding who has authority, expertise, and visibility, which in turn influences the dissemination of conspiracy theories.

Conspiracy theories on YouTube

The media themselves also influence the dissemination and visibility of conspiracy theories. As Varis (2018) points out, "the dissemination becomes a lot more visible when you are able to follow the development of a conspiracy theory on a media platform or from one platform to another." Moreover, the Internet and social media make the distribution of different kinds of content a lot easier. For instance, YouTube gives conspiracy theories heightened visibility when conspiracy theory videos appear among its Top Trending videos, thus contributing to the spread of disinformation and alternative evidence.

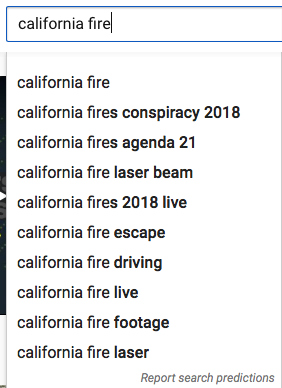

Many conspiracy theories circulate on media platforms such as YouTube. A recent example concerns the 2018 wildfires in California, which were claimed to have been caused by government lasers. YouTube can be said to have fuelled that conspiracy theory, as typing in "California fire" resulted in remarkable search suggestions such as "conspiracy 2018" and "laser beam" (see Picture 1).

Picture 1. Screenshot from YouTube regarding the California fires, taken on November 19th, 2018.

These search recommendations imply that (social) media platforms, in this case YouTube, are not neutral actors as they promote biased content and contribute to the visibility of specific types of content. As Nahon (2016) points out, "the basic elements of social media – their architecture and dynamics – are political, non-neutral and non-democratic in design, practices, and policies" (p. 5). Nahon (2016) further argues that (social) media platforms cannot be maintained without some kind of bias.

Conspiracy theories as forms of knowledge

Research has also frequently discussed how media platforms enable conspiracy theories to be validated, a process referred to as "pseudo-confirmation" or "pseudo-legitimacy" (Barkun, 2016). For instance, conspiracy theories are frequently re-posted on different media, which gives those theories the appearance of validity. When a belief appears on so many media platforms, viewers sometimes conclude that it must be true (Barkun, 2016). Intensity of circulation thus creates the suggestion of credibility.

This kind of pseudo-legitimacy can be seen as exemplifying the idea that the media contribute to the emergence of 'alternative facts' (i.e. knowledge). It is no longer only experts who produce knowledge that gains visibility in the media. Rakopoulos (2018) points to "a reign of 'alternative facts'" that seems to be more prevalent in public discourse than ever before. In fact, people tend to be suspicious of knowledge coming from official institutions and experts, and often replace it with the truth from their own individual experience and opinions.

Present-day media are fertile ground for conveying alternative knowledge. That is because alternative knowledge, including conspiracy theories, can be visible on media platforms alongside mainstream content. Consequently, the media contribute to the destigmatization of conspiracy theories. This means that conspiracy theories are emerging as a socially acceptable and commonly discussed mode of explanation (Barkun, 2016).

Another important aspect here is the role of visibility in understanding the spread of conspiracy theories. Even types of knowledge that used to be invisible can now gain visibility online due to changes in 'orders of visibility'. This refers to the idea that "some types of knowledge, as well as the practices that produce them, [become] more credible, more legitimate – and hence more visible – than others" (Hanell & Salö, 2017, p. 154). Nowadays, knowledge practices take place through many different media, "transforming in different sites and spaces, and convening different publics at different points" (Hanell & Salö, 2017, p. 5).

More concretely, the Internet reconfigures the established orders of visibility in such a way that power relationships and levels of visibility for different types of knowledge and knowledge producers may change. This can even facilitate people's access to knowledge. On the one hand, the Internet can facilitate the production of knowledge and make that knowledge highly visible. On the other hand, online knowledge can also easily be presented out of context or, even worse, alternative (i.e. fake) knowledge can easily be shared. Moreover, the affordances of (social) media platforms (e.g. search predictions, recommended content, and liking) make it easier for people to gain visibility and/or exercise power in making certain knowledge visible.

Algorithms promoting conspiracy theories

According to Varis (2018), conspiracy theorising online can play a big role in "solidifying certain views as 'knowledge' and constructing specific contributors and interlocutors as 'experts' through algorithmic and quantified knowledge-producing procedures." The algorithmic sorting of knowledge on (social) media platforms is a clear example of how information is categorised for viewers. This means that algorithms influence what becomes visible online and they play an increasingly important role in selecting what information is considered most relevant to us (Gillespie, 2014). In fact, algorithms produce and certify knowledge; they represent a particular 'knowledge logic.' That is, algorithms are "built on specific presumptions about what knowledge is and how one should identify its most relevant components" (Gillespie, 2014, p. 168).

In the case of the YouTube algorithm promoting conspiracy theories, content is recommended to viewers in terms of its 'newsworthiness', rather than its 'relevance'. What I mean here is that YouTube provides its viewers with content that keeps them glued to their screens. Users are served sensational content that makes them spend more time online, which, in turn, increases advertising revenues. Consequently, YouTube produces recommendations that push users toward conspiracy theories even if they seek out only mainstream sources.
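The difference between ranking by 'relevance' and ranking by what keeps viewers watching can be illustrated with a minimal sketch. It is not YouTube's actual algorithm, which is not public: the `Video` class, both ranking functions, and all scores are hypothetical, and the numbers are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    relevance: float               # hypothetical 0-1 match with the search query
    expected_watch_minutes: float  # hypothetical predicted viewing time


def rank_by_relevance(videos):
    """Order videos by how well they match what the user searched for."""
    return sorted(videos, key=lambda v: v.relevance, reverse=True)


def rank_by_engagement(videos):
    """Order videos by how long they are predicted to keep the user watching."""
    return sorted(videos, key=lambda v: v.expected_watch_minutes, reverse=True)


# Invented toy values, not measurements of real videos.
candidates = [
    Video("Official wildfire briefing", relevance=0.9, expected_watch_minutes=2.0),
    Video("Local news report on evacuation", relevance=0.8, expected_watch_minutes=3.0),
    Video("Sensational 'laser beam' video", relevance=0.4, expected_watch_minutes=9.0),
]

top_relevance = rank_by_relevance(candidates)[0].title
top_engagement = rank_by_engagement(candidates)[0].title
print("Top result by relevance: ", top_relevance)
print("Top result by engagement:", top_engagement)
```

Under these assumed values, the same three videos produce a different top result depending only on which quantity the ranking optimises, which is the point the paragraph above makes about sensational content.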

This may have a lot of impact on viewers, particularly on people who have not made up their mind about a specific subject. As conspiracies are recommended in such an implicit, gentle way, viewers may not realise how those recommendations are biased towards sensational content. If people come across these recommendations for a longer period of time, it may eventually lead them toward choices they would not have otherwise preferred.

YouTube has implemented several algorithmic elements on its platform. One of them is the Trending section, which shows popular videos at the top of the list. YouTube says that “the Trending system tries to choose videos that will be most relevant to our viewers and most reflective of the broad content on the platform” (YouTube's FAQ). According to YouTube's FAQ on how its Trending section works, YouTube's algorithm tries to promote videos that appeal to a wide range of viewers and are neither misleading nor sensational.

There are, however, many examples that suggest the opposite. To give one, in February 2018, YouTube’s number one trending video was about a conspiracy theory claiming that a survivor of the Parkland high school shooting was in fact an actor (title: “DAVID HOGG THE ACTOR….”). Presumably, this video was trending because it seemed "most reflective of the broad content on the platform" (according to YouTube's FAQ). Another algorithmic element is YouTube Search, in which the most 'relevant' results are displayed according to what people type in the Search Box. By suggesting "do you mean...", it helps viewers find information and certifies knowledge in the process. All this implies that algorithms both shape user practices and behavior and lead users to internalize the norms and attitudes promoted by algorithms (Gillespie, 2014).

Seeing is believing

What is also interesting in conspiracy theorising online is the visual element, since a lot of visual documents (images and videos) are displayed as evidence (Varis, 2018). Visual 'evidence' can be very persuasive in making people believe that there is some kind of conspiracy going on, based on the idea that "seeing is believing." An example of such a visual element in a conspiracy theory circulating online comes from the 2018 California fires. Photos were posted online that show light beaming down from the sky, suggesting that laser beams caused the fires (see Picture 2).

Picture 2. Collection of photos from the 2018 California fire, showing light beaming down from the sky.

However, online, the apparent realism of photographic images is open to fraud and deception. As Fetveit (1999) points out, "The development of computer programs for manipulation and generation of images has made it, at times, very hard to see whether we are looking at ordinary photographical images or images that have been digitally altered" (p. 795). The Internet has made it easier to manipulate an image so that its ‘photographic truth’ is subverted. Consequently, the evidential power of photographic images is lost, since they are more context-specific and can be easily altered.

Another way of manipulating materials is bricolage: taking something from its original context and putting it in a new one (Milner, 2016). In fact, bricoleurs "are manipulating the bits and pieces of popular media and juxtaposing them together in ways that directly (and intentionally) defy the intended meanings of the corporate media producers" (Soukup, 2008, p. 14). Consequently, the use of visual materials seems to allow for the emergence of a "perfect" conspiracy theory as a form of knowledge. The possibility of misleading (manipulation) and playing (bricolage) is thus important to consider when evaluating the authenticity of online conspiracy theories.

Conspiracy theories as a disease

To conclude, as we have seen, there are many reasons to believe that present-day media have significantly contributed to the development and visibility of conspiracy theories. They hold a lot of potential for spreading and reinforcing beliefs, mainly due to the emergence of algorithmic knowledge. Thus, for now, we can say that conspiracy theories are the disease and our media are a whole new type of carrier.

References

Barkun, M. (2016). Conspiracy theories as stigmatized knowledge. Diogenes, 1-7.

Bendix, A. (2018, November 29). YouTube is teeming with conspiracy theories about the California wildfires. Here’s what really may have caused the flames.

Fetveit, A. (1999). Reality TV in the digital era: a paradox in visual culture? Media, Culture & Society, 21(6), 787-804.

Gillespie, T. (2014). The relevance of algorithms. In Gillespie, T., Boczkowski, P. J., & Foot, K. A. (Eds.) Media technologies: Essays on communication, materiality, and society. MIT Press, 167-193.

Hanell, L., & Salö, L. (2017). Nine months of entextualizations: Discourse and knowledge in an online discussion forum thread for expectant parents. In Kerfoot, C., & Hyltenstam, K. (Eds.) Entangled discourses. South-North orders of visibility. New York: Routledge, 154-170.

Lewis, P. (2018, February 2). 'Fiction is outperforming reality': how YouTube's algorithm distorts truth. The Guardian.

Milner, R. M. (2016). The world made meme: Public conversations and participatory media. MIT Press.

Nahon, K. (2015). Where there is social media there is politics.

Oliver, J. E., & Wood, T. J. (2014). Conspiracy theories and the paranoid style(s) of mass opinion. American Journal of Political Science, 58(4), 952-966.

Rakopoulos, T. (2018). Show me the money: Conspiracy theories and distant wealth. History and Anthropology, 29(3), 376-391.

Soukup, C. (2008). 9/11 conspiracy theories on the World Wide Web: Digital rhetoric and alternative epistemology. Journal of Literacy and Technology, 9(3), 2-25.

Varis, P. (2018, May 12). Conspiracy theorising online. Diggit Magazine.

Varis, P. [Diggit Magazine] (2018, May 21). Dr. Piia Varis on conspiracy theories and digital culture [Video File].